Introduction

According to the ISTQB, “functional testing of a system includes tests that evaluate the functions that the system must perform. Functional requirements may be described in work products (requirements, specification, business need, user story, use case) and in the functional specification. They can also be wholly undocumented. The system’s functions provide the answer to the question ‘What does the system do?’ Functional tests have to be performed at all test levels (for example, component tests can be based on a component’s specification), though the focus is different at each level”.

A simple example

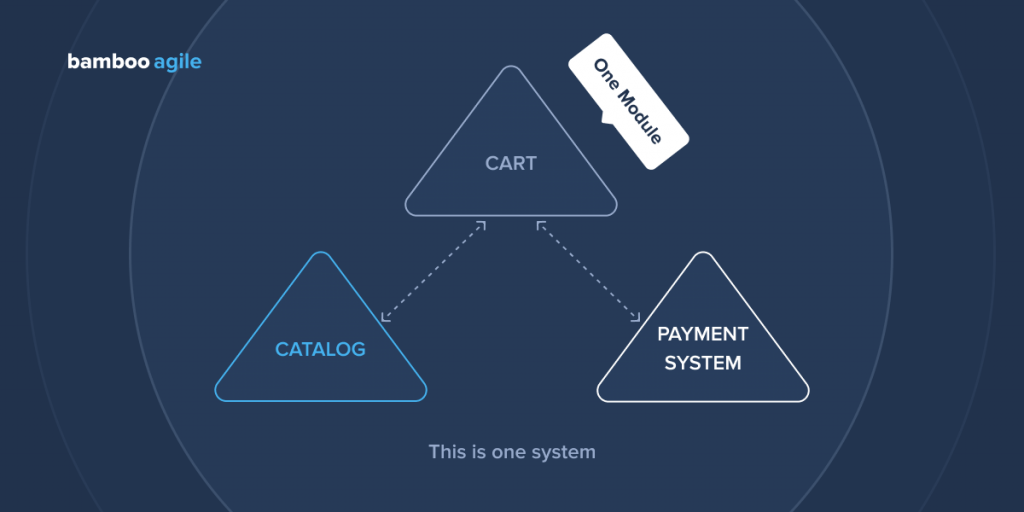

Let’s say a company has developed an online store. This store has the following elements:

- a Product catalogue – this functionality is described in the requirements;

- a Shopping cart – this functionality is described in user stories;

- a Payment feature – this functionality isn’t documented because it relies on a third-party system.

Ultimately, the testers at the development company need to answer the question of what the whole system does. But first, they have to answer it for each individual function:

- What does the Catalogue function do?

- What does the Shopping cart function do?

- What does the Payment function do?

Next, they have to answer the same question for these units working together:

- The Catalogue function + the Shopping cart function, e.g. adding products from the catalogue to the shopping cart;

- The Shopping cart function + the Payment function, e.g. paying for the products from the shopping cart;

- The Catalogue function + the Payment function, e.g. quickly paying for the products from the catalogue.

Finally, they answer the question for all the units in this example together:

- What do the functions of Catalogue + Shopping cart + Payment do?
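The incremental questioning above can be sketched in a few lines of Python that enumerate the scopes to test: each function on its own, then every pair, then all three together. The helper name `build_scopes` is our own illustration; note that `itertools.combinations` lists the pairs in a slightly different order than the text does.

```python
from itertools import combinations

# The three functions from the online-store example.
FUNCTIONS = ["Catalogue", "Shopping cart", "Payment"]


def build_scopes(functions):
    """Return every group of functions to test: singles, then pairs, then all together."""
    scopes = []
    for size in range(1, len(functions) + 1):
        # combinations() preserves the order of the input list within each group.
        for group in combinations(functions, size):
            scopes.append(" + ".join(group))
    return scopes


for scope in build_scopes(FUNCTIONS):
    print(f"What does {scope} do?")
```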

It’s worth noting that QA specialists will not test usability, security, or performance in this example. All of this falls under Non-functional testing, which tests “how well the system is performing”, according to the ISTQB.

With this simple example, we wanted to show owners of online stores, startups, and other software products the very essence of functional testing. But this is just the tip of the iceberg, and we will dive into the more interesting aspects of functional testing below.

If you don’t have time to read the whole article, just drop us a line at av@bambooagile.eu. We’ll discuss your project and draw up an initial plan for its functional and non-functional testing.

Functional testing types

Functional testing is one of several test types. A test type is a group of testing activities aimed at verifying specific characteristics of a system, or of a part of it, based on specific test objectives.

Depending on the test type, these objectives may include:

- Evaluation of functional quality characteristics, such as completeness, correctness, and appropriateness;

- Evaluation of non-functional quality characteristics, such as reliability, performance efficiency, security, compatibility, and usability;

- Evaluation of whether the structure or architecture of the component or system is correct, complete, and compliant with the specification;

- Evaluation of the effects of changes, for example, confirming that defects have been fixed (confirmation testing) and looking for unintended changes in behaviour caused by changes in the software or environment (regression testing).

The first of these objectives corresponds to functional testing, which stands alongside the following test types:

- Non-functional testing;

- White-box testing;

- Change-related testing.

Functional testing may have the highest priority and be the easiest for the client to perceive, but don’t forget that this type of testing is only one link in the chain of testing types.

In a way, functional testing is like a parachute made up of different pieces of fabric. For the parachutist (the tester) to make a smooth and efficient jump into testing, all the pieces must be tightly stitched together, withstand strong gusts of wind, and form the canopy needed for a good flight and landing. These pieces of fabric are the various kinds of testing that the tester relies on during functional testing and, of course, during the other test types.

Unit testing

Unit testing is the testing of the smallest functional unit able to work independently.

Let’s get back to the online store example and its Shopping cart. We can see that the “Shopping cart” is a separate unit within the system.

If the task were to develop a “Shopping cart of an online store” system, its units would be the following:

- “Open the Shopping cart” button;

- “Delete item from the Shopping cart” function;

- “Change the number of items in the Shopping cart” function;

- “Go to payment” button.

This shows why a layered project structure matters. The smaller each subsystem, the better for both the customer and the developers, because the first goal of functional testing is risk reduction: reducing the risk that defects go unnoticed, both in the written documentation and in the software itself.

In general, the purposes of Unit testing are as follows:

- Risk reduction;

- Verification of whether the functional behaviour of the components conforms with the established design requirements;

- Building confidence in the quality of the component;

- Detection of defects in a component;

- Preventing the passage of defects onto higher test levels.
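To make this concrete, here is a minimal sketch of unit tests for two of the cart units listed above, using Python’s built-in `unittest` module. The `ShoppingCart` class and its methods are hypothetical stand-ins for a real implementation; each test checks one unit in isolation.

```python
import unittest


class ShoppingCart:
    """Hypothetical cart, used only to illustrate unit tests."""

    def __init__(self):
        self.items = {}  # product id -> quantity

    def add_item(self, product_id, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items[product_id] = self.items.get(product_id, 0) + quantity

    def change_quantity(self, product_id, quantity):
        """The "Change the number of items in the Shopping cart" unit."""
        if product_id not in self.items:
            raise KeyError(product_id)
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items[product_id] = quantity

    def delete_item(self, product_id):
        """The "Delete item from the Shopping cart" unit; ignores unknown ids."""
        self.items.pop(product_id, None)


class ShoppingCartUnitTests(unittest.TestCase):
    def setUp(self):
        # Every test starts from a fresh cart holding two mugs.
        self.cart = ShoppingCart()
        self.cart.add_item("mug", 2)

    def test_change_quantity_updates_count(self):
        self.cart.change_quantity("mug", 5)
        self.assertEqual(self.cart.items["mug"], 5)

    def test_change_quantity_rejects_zero(self):
        with self.assertRaises(ValueError):
            self.cart.change_quantity("mug", 0)

    def test_delete_item_removes_product(self):
        self.cart.delete_item("mug")
        self.assertNotIn("mug", self.cart.items)
```

Saved to a file, the tests can be run with `python -m unittest <file>`; pytest also collects `unittest.TestCase` classes.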

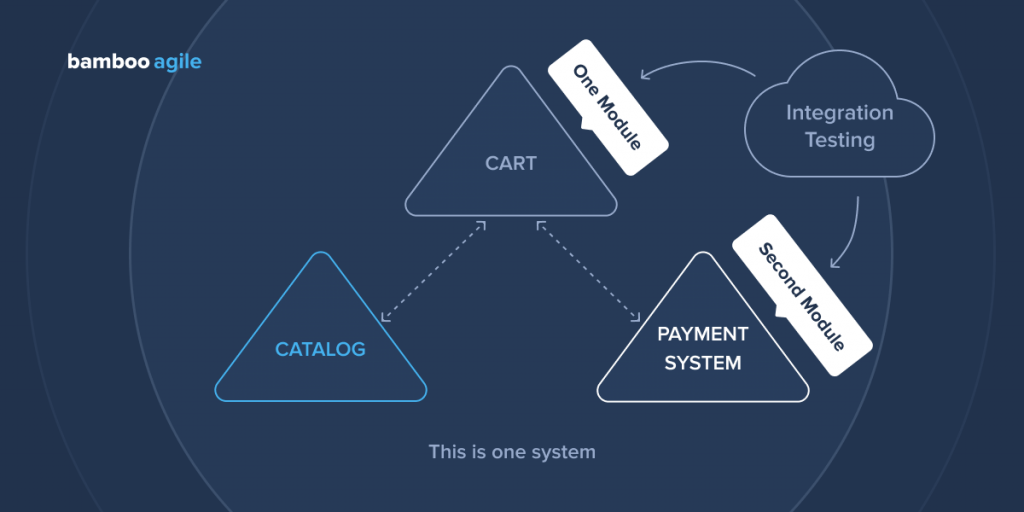

Integration testing

Integration testing is a broad term, as it covers the interaction of both units and systems. It is the next level after unit testing. Its purposes are similar to those of unit testing, but they focus on interfaces and whether they work correctly:

- Risk reduction;

- Checking whether the functional and non-functional behaviour of the interfaces meets the specified design requirements;

- Increasing the confidence in the quality of interfaces;

- Detection of defects (which can be found in interfaces, components, and systems alike);

- Preventing the passage of defects onto higher test levels.

In our example, integration testing means testing several elements or systems together. For instance, the “Shopping cart” feature can itself be treated as a system made up of the units listed above.

In integration testing, it is worth paying attention to the focus of testing, which differs between two levels:

- Component integration testing focuses on the interactions and interfaces between previously tested units and is often automated.

- System integration testing focuses on the interactions and interfaces between systems, including off-the-shelf systems and software packages. It can also cover interfaces provided by third-party services.
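A component integration test targets the interface between units rather than each unit’s internals. In this sketch, which assumes a hypothetical `Catalogue` and a `ShoppingCart` that looks prices up in it, the tests only pass if the two units agree on how products and prices are exchanged.

```python
import unittest


class Catalogue:
    """Hypothetical product catalogue mapping product ids to prices in cents."""

    def __init__(self, prices):
        self._prices = dict(prices)

    def price_of(self, product_id):
        return self._prices[product_id]  # KeyError for unknown products


class ShoppingCart:
    """Hypothetical cart that depends on the Catalogue for prices."""

    def __init__(self, catalogue):
        self.catalogue = catalogue
        self.items = {}  # product id -> quantity

    def add_from_catalogue(self, product_id, quantity=1):
        self.catalogue.price_of(product_id)  # raises before adding an unknown product
        self.items[product_id] = self.items.get(product_id, 0) + quantity

    def total(self):
        return sum(self.catalogue.price_of(p) * q for p, q in self.items.items())


class CatalogueCartIntegrationTests(unittest.TestCase):
    """Checks the interface between two units that were already unit-tested."""

    def setUp(self):
        self.cart = ShoppingCart(Catalogue({"mug": 1200, "tea": 450}))

    def test_total_uses_catalogue_prices(self):
        self.cart.add_from_catalogue("mug", 2)
        self.cart.add_from_catalogue("tea")
        self.assertEqual(self.cart.total(), 2 * 1200 + 450)

    def test_unknown_product_is_not_added(self):
        with self.assertRaises(KeyError):
            self.cart.add_from_catalogue("laptop")
        self.assertEqual(self.cart.items, {})
```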

Let’s get back to the online-store example.

Do you remember its components?

- Product catalogue – this functionality is described in the requirements.

- Shopping cart – this functionality is described in user stories.

- Payment feature – this functionality isn’t documented because it relies on a third-party system.

So, an example of system integration testing would be testing how the Shopping cart (treated as a system) works together with Payment (a third-party service).
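Since the Payment feature is a third-party system, one common approach is to replace it with a test double and check what the Shopping cart sends across the interface. The sketch below uses `unittest.mock.Mock` for that; the `Checkout` class and the `charge(amount_cents, currency)` method are assumptions for illustration, not any real provider’s API. A mock only verifies our side of the interface, so tests against the provider’s real or sandbox environment are still needed.

```python
import unittest
from unittest import mock


class Checkout:
    """Hypothetical bridge between the Shopping cart and a third-party payment service."""

    def __init__(self, gateway):
        # `gateway` is any object with a charge(amount_cents, currency) method;
        # this interface is an assumption made for the sketch.
        self.gateway = gateway

    def pay(self, cart_total_cents):
        if cart_total_cents <= 0:
            raise ValueError("nothing to pay for")
        response = self.gateway.charge(cart_total_cents, "EUR")
        return response.get("status") == "approved"


class CartPaymentIntegrationTests(unittest.TestCase):
    def test_cart_total_is_sent_to_payment_service(self):
        gateway = mock.Mock()
        gateway.charge.return_value = {"status": "approved"}
        self.assertTrue(Checkout(gateway).pay(2850))
        gateway.charge.assert_called_once_with(2850, "EUR")

    def test_declined_payment_is_reported(self):
        gateway = mock.Mock()
        gateway.charge.return_value = {"status": "declined"}
        self.assertFalse(Checkout(gateway).pay(2850))

    def test_empty_cart_never_reaches_payment_service(self):
        gateway = mock.Mock()
        with self.assertRaises(ValueError):
            Checkout(gateway).pay(0)
        gateway.charge.assert_not_called()
```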

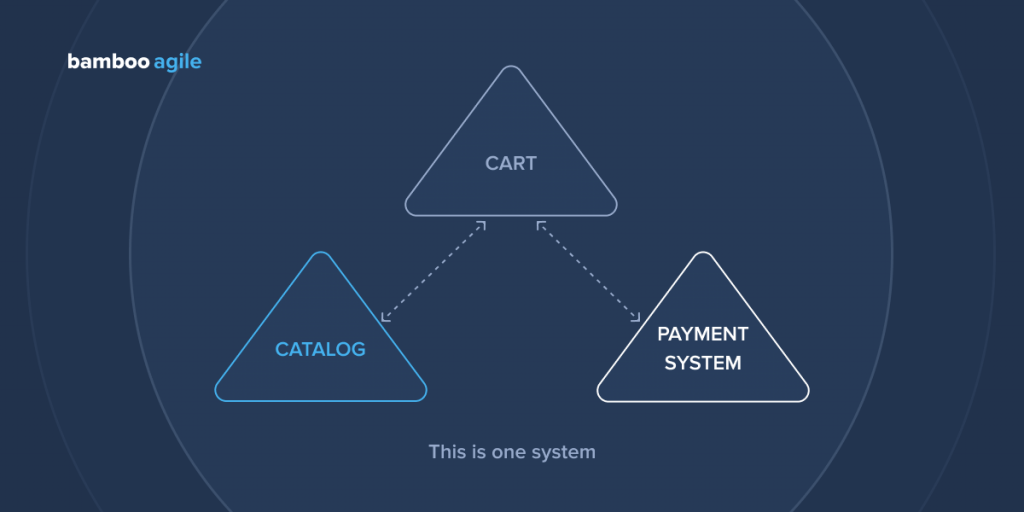

System testing

System testing checks how the entire system operates and relies on previously tested modules, both individually and together during integration testing. Schematically it looks very simple because the whole system is under testing.

You may look at testing levels and their logical relationships in a completely new way after seeing the online store example from this article once again.

Of course, system testing has its purposes:

- Risk reduction;

- Checking whether the functional and non-functional behaviour of the system conforms to the established project requirements, design and specifications;

- Verification that the system is fully implemented and will work as expected;

- Increasing the confidence in the quality of the system as a whole;

- Defect detection;

- Preventing the defects from reaching higher test levels or production environments.

The main feature of system testing is that various stakeholders make release decisions based on these test results.

Interface testing

Testing the user interface (UI) means checking how easy it is to use and whether it meets the specified requirements as well as the approved prototype.

We test how predictable the program’s behaviour is and how interface elements are displayed on various devices when the user performs certain actions. This allows us to evaluate how effective the user’s work with the application is.

Our task at this stage is to identify structural and visual imperfections in the application interface, check how convenient the interface is for navigation, and make sure that users can access all of the application’s functionality through it.

We check how the interface elements react to user actions and how the application handles keyboard, mouse, or touchpad inputs.

For these purposes, we use several approaches:

- manual testing, which allows us to check the application UI for compliance with the design and prototype layouts;

- automated testing after each product build to identify interface errors and regression bugs;

- conducting focus groups.

Using different approaches in UI testing allows one to test the user interface more thoroughly, improve the quality of the application, and increase usability.

When testing, we take into account the criteria for creating a high-quality interface, namely:

- minimum time for the user to complete tasks;

- minimum number of user errors when working with the application;

- complete understanding of the interface by users and the absence of ambiguities when working with it;

- the minimum amount of information entered by users;

- simplicity and visual appeal of the interface.

To test the UI, we conduct cross-browser and multi-platform testing. This helps ensure that the application works correctly on the target devices and in all modern browsers.

Regression testing

Code changes inevitably accompany software development: new functionality is implemented, existing functionality is changed, and defects are fixed. The task of regression testing is to ensure that these changes do not “break” software that already works.

Software changes require regression testing, which consists of the following stages:

- Analysis of the changes made and of the requirements, and identification of the areas that may have been affected;

- Compilation of a set of relevant test cases;

- Conducting the first round of regression testing;

- Drawing up defect reports: each defect is entered into the bug tracking system together with the steps to reproduce it, accompanied by video and screenshots where possible;

- Elimination of defects;

- Verification of defects: QA engineers check whether each defect is actually fixed, and if the problem persists, a new report is created;

- Conducting the second round of regression testing.

In some cases, whether to fix a defect is negotiable. All critical and major defects must be fixed without fail, but minor ones that are costly to fix can be left in, especially when they are not visible to the user.

Typically, several rounds of regression testing are required to eliminate all defects and stabilize the application.
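The first two stages (finding the affected areas and compiling the relevant test cases) can be sketched as a simple change-based selection. The `TEST_CASES` mapping below is hypothetical; in practice, this information would come from a traceability matrix or coverage data, and many teams still run the full regression suite before a release.

```python
# Hypothetical mapping: which application areas each test case exercises.
TEST_CASES = {
    "TC-01 add product to cart": {"catalogue", "cart"},
    "TC-02 change item quantity": {"cart"},
    "TC-03 pay for cart": {"cart", "payment"},
    "TC-04 search catalogue": {"catalogue"},
    "TC-05 quick pay from catalogue": {"catalogue", "payment"},
}


def select_regression_tests(changed_areas, test_cases=TEST_CASES):
    """Return the test cases that touch at least one changed area, sorted by id."""
    changed = set(changed_areas)
    return sorted(name for name, areas in test_cases.items() if areas & changed)


# A change to the payment integration affects only the payment-related cases.
print(select_regression_tests({"payment"}))
# → ['TC-03 pay for cart', 'TC-05 quick pay from catalogue']
```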

Smoke testing

Smoke testing is a type of testing carried out at the initial stage (for example, after a new build) and primarily aimed at checking the readiness of the developed product for more extensive testing, as well as determining the general state of the product’s quality.

This is a short cycle of tests that confirms or refutes that the application starts and performs its main functions. It allows us to find the main critical defects quickly, at an early stage.

First, it is necessary to determine which tasks users solve with the application, which obvious steps they take to solve them, and which important requirements must be met, and in what order.

Based on this, we create a test suite: a collection of test cases grouped and linked in a specific way. Smoke tests are designed to check basic functionality and should be an integral part of the testing process. They can answer questions as simple as “Can I register?”. Smoke testing assumes YES/NO answers, and all test cases must pass.

Smoke tests should be fast and lightweight so that they can be run frequently. Depending on the specifics of the project, a smoke test can be completed in a few minutes or a few hours.

It should be understood that smoke testing is not in-depth product testing. As mentioned above, it determines whether a product is ready for more comprehensive testing. If the product does not pass smoke testing, it must be sent back for revision. It is imperative to record the test results so that there is a record of what works and what doesn’t.
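A smoke suite can be thought of as a list of YES/NO questions, where a single NO sends the build back. The following Python sketch assumes each check is a function returning `True` or `False` and treats a crashing check as a NO; the check functions themselves are hypothetical placeholders for real UI or API steps. The returned list of failed questions doubles as the record of results.

```python
from typing import Callable, Dict, List, Tuple

# A smoke check answers a single YES/NO question about the build.
SmokeCheck = Callable[[], bool]


def run_smoke_suite(checks: Dict[str, SmokeCheck]) -> Tuple[bool, List[str]]:
    """Run every check and return (build_accepted, questions_answered_no).

    A check that raises an exception counts as a NO answer.
    """
    failed = []
    for question, check in checks.items():
        try:
            ok = bool(check())
        except Exception:
            ok = False
        if not ok:
            failed.append(question)
    # Smoke testing expects YES for every question; one NO sends the build back.
    return len(failed) == 0, failed


# Hypothetical checks against a new build; real ones would drive the UI or API.
def app_starts() -> bool:
    return True


def can_register() -> bool:
    raise ConnectionError("registration service unavailable")


accepted, failed = run_smoke_suite({
    "Does the application start?": app_starts,
    "Can I register?": can_register,
})
print("accepted" if accepted else f"sent back for revision: {failed}")
```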

Sanity testing

Sanity testing is a narrowly focused check of individual functional elements, systems, web architectures, and calculations. Its sole purpose is to make sure that the functionality being checked works exactly as required.

This type of testing is often performed before the start of the full cycle of regression tests, but only after the end of the smoke test. Traditionally, sanity checks are performed when a minor defect within the system has been corrected or after the software’s logic has been partially edited.

As soon as changes have been made to the code, the new build of the software is submitted to the testing department. Once the build is installed, QA specialists can quickly validate the changed functionality instead of running a full regression, which would take significantly longer.

If the bug fixes and code revisions do not work correctly, the tester should not accept the build for further testing. Defects found at such an early stage can save a lot of time that would otherwise be spent testing an unfinished build.

Key features of sanity testing:

- It is a narrowly focused testing process that validates selected functionality in detail rather than the whole system;

- It is a quick check that the changes made to the software configuration work as expected;

- It is performed to quickly check whether minor errors in the software have been fixed without introducing wider configuration errors.

Sanity testing is closely related to configuration testing, which verifies how the system works under various combinations of software and hardware (operating system versions, browsers, supported drivers, memory sizes, hard drive types, CPU types, etc.). It’s done to find the best configuration under which the system works without flaws or issues while meeting its functional requirements.
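Configuration testing often starts from a matrix of the supported combinations. This sketch builds one with `itertools.product`; the operating systems, browsers, memory sizes, and excluded pairs are hypothetical examples. The number of combinations grows multiplicatively with every added dimension, which is why teams frequently reduce the matrix with techniques such as pairwise testing.

```python
from itertools import product

# Hypothetical configuration dimensions; real ones come from the supported-platform list.
OPERATING_SYSTEMS = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
BROWSERS = ["Chrome", "Firefox", "Safari"]
MEMORY_GB = [4, 16]

# (os, browser) pairs that don't exist in practice and should not be tested.
UNSUPPORTED = {("Windows 11", "Safari"), ("Ubuntu 22.04", "Safari")}


def configuration_matrix():
    """Return every (os, browser, memory) combination except unsupported ones."""
    return [
        (os_name, browser, memory)
        for os_name, browser, memory in product(OPERATING_SYSTEMS, BROWSERS, MEMORY_GB)
        if (os_name, browser) not in UNSUPPORTED
    ]


matrix = configuration_matrix()
print(len(matrix))  # → 14 (18 combinations minus 4 unsupported ones)
```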

Installation testing

Also known as implementation testing, installation testing checks if the software is installed and uninstalled correctly. This is done in the last phase of testing, before the end user first interacts with the product.

It covers the following procedures and scenarios:

- constructing user flow diagrams and employing automation scripts;

- ensuring that updates to the application are installed in the same location as the previous versions;

- checking the available disk space before the installation starts: if it’s insufficient, the user must be notified and the installation must not begin;

- checking if installation works properly on different machines;

- checking if all the files have been properly installed;

- covering negative scenarios, such as stopping the installation in the middle either through user intervention or because of system issues – the user must be able to restart the process;

- verifying registry changes after the complete installation.

Uninstallation testing works similarly, in that it checks if all the files are successfully removed from the system.
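Some of these checks are easy to automate. The sketch below covers three of them: a disk space check before installation, a check that all expected files were installed, and a check that nothing is left behind after uninstallation. The file manifest and the 50 MB requirement are hypothetical, and registry verification (which is Windows-specific) is not covered.

```python
import shutil
import tempfile
from pathlib import Path


def has_enough_disk_space(target_dir, required_bytes):
    """Pre-installation check: is there enough free space at the target location?"""
    return shutil.disk_usage(target_dir).free >= required_bytes


def missing_files(install_dir, expected_files):
    """Post-installation check: return expected files that were not installed."""
    root = Path(install_dir)
    return [name for name in expected_files if not (root / name).is_file()]


def leftover_files(install_dir):
    """Post-uninstallation check: return any files still present under install_dir."""
    root = Path(install_dir)
    if not root.exists():
        return []
    return sorted(str(p.relative_to(root)) for p in root.rglob("*") if p.is_file())


# Demo against a temporary directory standing in for the installation target.
with tempfile.TemporaryDirectory() as target:
    if not has_enough_disk_space(target, 50 * 1024 * 1024):  # hypothetical 50 MB need
        print("Not enough disk space: notify the user and do not start installing")
    else:
        (Path(target) / "app.exe").write_text("placeholder")  # simulate a partial install
        print(missing_files(target, ["app.exe", "config/settings.ini"]))
```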

Test coverage assurance

Test coverage assurance is the assessment of the system’s test coverage density. It helps QA teams monitor the quality of testing.

A lot of testers base their coverage calculations only on functional requirements. Of course, the application should do what it is supposed to do, first and foremost. That said, if the testing is more in-depth (with independent non-functional testing teams working on performance, security, usability, etc.), testers will have to track their requirements all the way to execution via test coverage analytics.

Some teams consider a requirement covered if there is at least one test case for it; others, if at least one team member is assigned to it; and still others, only if all the test cases associated with the requirement have been executed successfully.

For example:

- There are 10 requirements and 100 tests. If these 100 tests cover all 10 requirements, coverage is complete, at the design level, that is.

- 80 of the 100 tests are executed, but they target only 6 of the 10 requirements. Even though 80% of the tests have been run, 4 requirements are not covered, so coverage at the execution level is only 60%.

- 90 tests relating to 8 requirements are assigned to testers, but the rest are not. In that case, test assignment coverage is 80% (8 of the 10 requirements have tests assigned).
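The three views of coverage in this example can be computed from the same test-to-requirement mapping. This is a minimal sketch: the test data is hypothetical, built only to reproduce the numbers above (100 tests, 80 executed on 6 requirements, 90 assigned on 8 requirements), and it assumes each test targets exactly one requirement.

```python
from typing import Dict, Iterable, Set


def requirement_coverage(requirements: Iterable[str],
                         test_to_requirement: Dict[str, str],
                         counted_tests: Set[str]) -> float:
    """Percentage of requirements linked to at least one test in `counted_tests`.

    Pass all tests for design-level coverage, executed tests for
    execution-level coverage, or assigned tests for assignment coverage.
    """
    reqs = set(requirements)
    covered = {test_to_requirement[t] for t in counted_tests} & reqs
    return 100.0 * len(covered) / len(reqs)


def requirement_for(n: int) -> str:
    """Hypothetical mapping of test T<n> to the single requirement it targets."""
    if n <= 80:
        return f"R{(n - 1) % 6 + 1}"  # T1-T80 all target R1-R6
    return f"R{7 + (n - 81) // 5}"    # T81-T100: five tests each for R7-R10


REQUIREMENTS = [f"R{i}" for i in range(1, 11)]
TESTS = {f"T{n}": requirement_for(n) for n in range(1, 101)}
ALL_TESTS = set(TESTS)
EXECUTED = {f"T{n}" for n in range(1, 81)}
ASSIGNED = {f"T{n}" for n in range(1, 91)}

print(requirement_coverage(REQUIREMENTS, TESTS, ALL_TESTS))  # design level → 100.0
print(100 * len(EXECUTED) / len(TESTS))                      # tests executed → 80.0
print(requirement_coverage(REQUIREMENTS, TESTS, EXECUTED))   # execution level → 60.0
print(requirement_coverage(REQUIREMENTS, TESTS, ASSIGNED))   # assignment → 80.0
```

Note how the share of executed tests (80%) and execution-level requirement coverage (60%) diverge, which is exactly the gap the second bullet describes.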

Timing also matters. If you calculate test coverage too early in the process, many things will still be incomplete, and you will see a lot of gaps. It’s therefore generally better to wait until the final regression build to assess test coverage most accurately.

Acceptance testing

Acceptance testing is a process aimed at verifying that a software product meets the established requirements. It’s a kind of endpoint testing designed to simulate the intended real-world use of a software application to ensure that it is fully functional and meets the user’s requirements.

Documentation testing often falls into this category, as it involves checking documents for compliance with accepted standards, as well as compliance with certain characteristics.

Aims of acceptance testing:

- Determine if the system meets the acceptance criteria;

- Decide whether to approve a product release or send it back for further development.

Advantages:

- Helps to identify bugs in the user interface;

- Involves actual users, which helps ensure that the application can handle tasks in real-world conditions.

Types of acceptance testing:

- Factory acceptance testing (FAT) is performed by the project development team to ensure that the application has achieved the required level of quality. This type of testing is also called alpha testing;

- Production acceptance testing (PAT), or operational acceptance testing, is an important part of the development life cycle. It is performed before the product’s release to ensure that its operational aspects (such as backup and restore, installation and upgrades, and maintenance tasks) work correctly and do not affect its functionality;

- Site acceptance testing (SAT) is the testing of software products or related documentation performed by the customer’s representatives. End users verify that the system and its components are working correctly based on the procedures agreed upon with the customer.

Functional testing tools

An important part of testing is the tools used for it. Of course, the devtools panel and raw ingenuity will be enough for superficial testing. But for full immersion in quality testing, and for being able to carry out all functional testing types, you will need:

- TeamCity;

- Selenium WebDriver;

- Firebug;

- XPather;

- IE Developer Toolbar;

- JUnit;

- JMeter;

- VMware;

- TestLink, etc.

Selenium WebDriver and JMeter deserve a special mention. Why?

Selenium WebDriver is a tool for automating web browser actions. It’s mostly used for testing web applications, but there’s more to it. For one, it can be used to solve routine site administration tasks or to regularly receive data from various sources.

JMeter is a load testing tool developed by the Apache Software Foundation. Although it was originally designed as a web application testing tool, JMeter is now capable of stress testing JDBC connections, FTP, LDAP, SOAP, JMS, POP3, IMAP, HTTP, and TCP.

Imagine yourself in a dark mine (which can be a confusing and incomprehensible project). In the process of testing, Selenium WebDriver will replace your manual prehistoric pickaxe with a modern automatic jackhammer, and JMeter will become a lantern that easily detects and points out system flaws.

Conclusion

Functional testing is an integral part of the software testing process. It comes in many forms, all of which work towards the same goal: ensuring that the solution works exactly as intended.

If you want to learn more about functional testing types or the QA process as a whole, feel free to sign up for a free consultation with our specialists. We’ll be more than happy to share our expertise!